It’s Time to Terminate

Everything You Believe About A.I.

It’s Going to Change Your Business & Your Life Way Faster than You Think

by Bob Cooney

I recently attended the 50th anniversary SIGGRAPH conference in Denver to see some of the emerging technology in the virtual reality industry. Historically, it’s been a total geek fest, with computer scientists and PhDs coming together to share papers and university researchers showcasing their latest work. There was an immersive section and a VR theater showcasing some great storytelling experiences, but the theme of the show this year was artificial intelligence.

NVIDIA makes the graphics chips, called GPUs, found in most high-end computers and servers. Today’s AI was made possible by GPU-accelerated computing, a technology NVIDIA pioneered in the early 2000s. It transformed computer graphics and opened up the ability to scale computing power exponentially, leading to where we are today.

NVIDIA has been the biggest beneficiary of the recent AI boom, with its stock price soaring 2,900% in the last five years. AI requires massive data centers with thousands of servers, each containing multiple NVIDIA GPUs. Google, Meta, Microsoft, Amazon and others are in a billion-dollar race to build more data centers to scale up their AI ambitions.

That ambition was on display in the sheer number of artificial intelligence tools showcased at SIGGRAPH. If you follow the media narrative on AI, you’ll quickly learn there are two extreme beliefs: one optimistic, one not.

Tech Optimism

Artificial intelligence is a tool that will lead to planetary abundance. Human lifespans will extend to hundreds of years. Humans won’t need to work; thanks to the increased productivity from AI and robots, we will all receive a universal basic income. Humanity will experience some kind of nirvana.

Tech Apocalypse

AI is going to take away all our jobs. It’s stealing our intellectual property. Eventually, it will become sentient, realize humans are a scourge on the planet and wipe us all out, like in the Terminator movies.

Nobody knows where this will go, or how long it might take to play out. But I can tell you it’s happening WAY FASTER than anyone expected. Today, there are people and companies using AI to gain an advantage over those who don’t. You want to be in the former group.

Generative AI

ChatGPT is the hype word of the year. If you haven’t used it, stop reading this article, go to ChatGPT.com and start playing. Come back when you’re done.

You’re welcome.

ChatGPT is what’s called a large language model, or “LLM.” It’s a type of Generative AI. I asked ChatGPT to describe Generative AI to someone who knows nothing about tech. It replied:

Imagine you have a robot that can create things like text, pictures, or even music based on instructions you give it. This robot doesn’t “think” like a human, but it’s trained to understand and learn from a huge amount of information, like books, websites, or images. Then, when you ask it to do something, it uses that knowledge to generate something new that looks or sounds like it was made by a person.

For example, you could ask it to write a story, and it will come up with one for you, or you could ask it to make a picture of a sunny beach, and it will create that too. This ability to create, or “generate,” is why it’s called “generative” AI. It helps people by automating creative tasks that would usually take time and effort!

Thanks, ChatGPT.

I use it all the time for creating blog and newsletter headlines, crafting summaries of articles and generating images. But increasingly, I’m using it as an “extended brain.”

Brain Extension

Most people see Generative AI as just a tool, but it’s capable of so much more. It was trained on a vast swath of the world’s knowledge (scientific, business, spiritual, you name it) and can surface that knowledge in seconds. AI can improve decision-making and help foster creative solutions.

When working with AI, the key is to ask open-ended questions. Closed questions usually get limited answers, but open-ended questions can lead to deeper thinking and creative ideas. Once you’ve asked a question, treat the exchange as a dialogue. Keep asking follow-up questions to get better and deeper answers.

Maintain Evolving AI Conversations

Iterative Questioning: Start broad, then narrow down based on the responses. “How should I analyze my competition in the market?” Then, “How could I look at my pricing strategies compared to these competitors?”

Contextual Prompts: Provide relevant information to guide the AI.

• Company Data: Share relevant metrics, recent performance reports, market trends, and competitor analysis.

• Project Details: Include project goals, timelines, team roles and any current challenges.

• Customer Insights: Share data on customer preferences, pain points and feedback.

• Role-Playing Scenarios: Ask the AI to adopt specific personas or roles: “As a financial advisor, how would you approach investment diversification?”

These techniques help keep your interactions dynamic and productive, tapping into AI’s current potential as a collaborator for idea generation and problem-solving.
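For readers who work with these tools through code, the techniques above map naturally onto the role/content message list that many chat-model APIs, including OpenAI’s, accept. What follows is a hypothetical sketch: build_conversation and add_follow_up are made-up helper names, the context strings are placeholders for your own data, and no model is actually called.

```python
# Sketch: role-play persona + business context + iterative questioning,
# expressed as a chat-style list of {"role": ..., "content": ...} messages.
# Helper names are hypothetical; no AI service is contacted.

def build_conversation(persona, context, opening_question):
    """Start a conversation with a persona, labeled context and a broad question."""
    system_prompt = f"You are {persona}. Use the context provided to give specific, practical advice."
    context_lines = "\n".join(f"- {label}: {detail}" for label, detail in context.items())
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": f"Context:\n{context_lines}\n\n{opening_question}"},
    ]


def add_follow_up(messages, model_reply, follow_up):
    """Record the model's answer, then narrow the discussion with a follow-up question."""
    return messages + [
        {"role": "assistant", "content": model_reply},
        {"role": "user", "content": follow_up},
    ]


# Placeholder context: swap in your own metrics and customer feedback.
context = {
    "Company data": "<recent revenue and attendance figures>",
    "Customer insights": "<common complaints from guest surveys>",
}

conversation = build_conversation(
    "an experienced entertainment-venue consultant",
    context,
    "How should I analyze my competition in the market?",
)
conversation = add_follow_up(
    conversation,
    "<the model's first answer goes here>",
    "How could I look at my pricing strategies compared to these competitors?",
)

for message in conversation:
    print(f"{message['role']}: {message['content']}\n")
```

Keeping the whole history in one list is the design choice that makes the conversation iterative: each follow-up is sent along with everything said before it, so the model can build on its earlier answers.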

Incredible Graphics Capabilities

As companies pour billions into AI research, the tools become increasingly capable. Dozens of companies showcased AI’s ability to create lifelike graphics and complex simulations. Some of the most interesting included:

• Dynamic Environments: AI can now generate entire ecosystems that evolve based on user interaction. Using voice prompts, someone can create a sketch, keep adding prompts to evolve it into a 3D model, place the model in a 3D environment, and then generate computer code that animates it all. Programmers not using AI are already becoming dinosaurs.

• Hyper-Realistic Textures: New tools enable the creation of textures that closely mimic real-world materials. You can scan a rock surface you like and apply it to an entire environment. Now, virtual worlds can look photorealistic without hundreds of hours of artist labor.

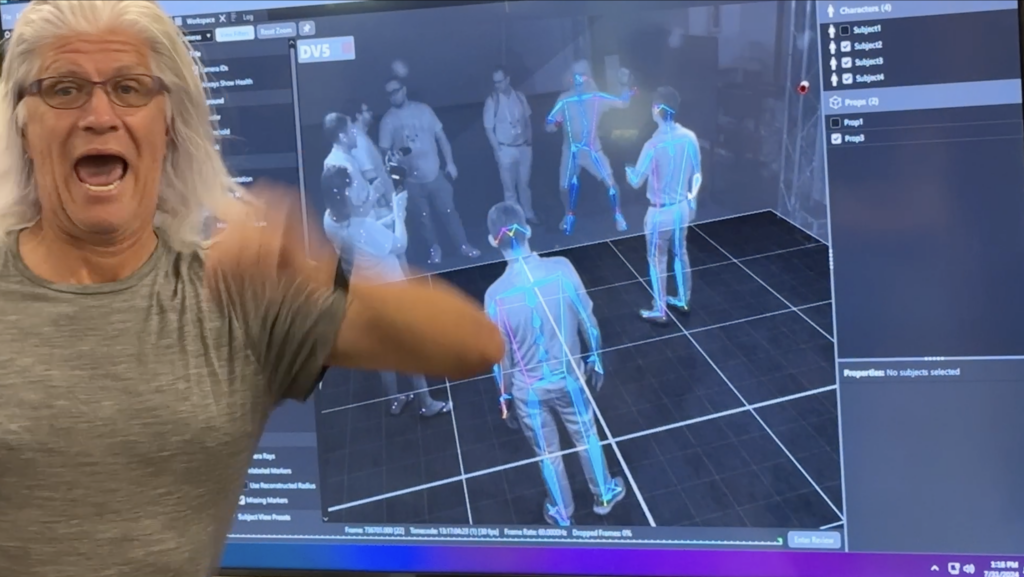

• Markerless Motion Capture: New camera-based technology enables fluid and natural character movements without wearables or tracking markers. This has huge implications for free-roam virtual reality, reducing capex and labor costs while increasing throughput and customer satisfaction.

Making video games is about to get much easier. Smaller teams will create games in a fraction of the time today’s workflows require. We already have a plethora of free-roam VR content, and I see a lot more on the horizon. Arcade games still require factories and hardware integration, but as hardware platforms become more standardized, we might see content coming from unexpected places. How many operators have had an idea for a “great” game but could not create it because it was too difficult?

An AI Augmented Workforce

It’s natural to think that bigger is better. But there’s a line of thinking in AI that smaller language models are better suited to specific tasks. The next stage of AI development will see function-specific AIs working together as teams.

Teams of smaller AI models, each with a specific job function, will manage different aspects of a development pipeline, overseen by a central management AI. This hierarchical structure resembles an organizational chart and is designed to keep the output efficient and cohesive.
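The org-chart idea can be pictured with a toy Python sketch, under loudly stated assumptions: each “specialist” is a placeholder function standing in for a smaller, task-specific model, the manager only routes a pre-written plan rather than planning on its own, and every name is hypothetical. It shows the shape of the hierarchy, not how any vendor actually builds one.

```python
# Toy model of a "manager" AI delegating to specialist AIs.
# Each specialist is a stand-in function; in a real pipeline each would
# call a smaller, task-specific model. All names are hypothetical.
from typing import Callable, Dict, List, Tuple


def concept_artist(task: str) -> str:
    return f"[concept art for: {task}]"


def level_designer(task: str) -> str:
    return f"[level layout for: {task}]"


def gameplay_programmer(task: str) -> str:
    return f"[gameplay code for: {task}]"


# The "org chart": which specialist handles which kind of work.
SPECIALISTS: Dict[str, Callable[[str], str]] = {
    "art": concept_artist,
    "level": level_designer,
    "code": gameplay_programmer,
}


def manager(project_plan: List[Tuple[str, str]]) -> List[str]:
    """Route each (specialty, task) pair to its specialist and collect results in order."""
    results = []
    for specialty, task in project_plan:
        worker = SPECIALISTS.get(specialty)
        if worker is None:
            # Flag work nobody on the team can do instead of silently dropping it.
            results.append(f"[unassigned: no specialist for '{specialty}']")
        else:
            results.append(worker(task))
    return results


plan = [
    ("art", "haunted mine entrance"),
    ("level", "three-room escape sequence"),
    ("code", "lantern that reveals hidden doors"),
]

for output in manager(plan):
    print(output)
```

In the vision described above, the manager would itself be a model that breaks a goal into tasks and reviews the results; here that step is replaced by a fixed plan to keep the structure visible.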

Consider how 3D worlds are built. USD (Universal Scene Description) is an open standard language for the 3D internet. It describes the geometry, materials, physics and behavior of 3D worlds. USD allows 3D artists, designers, developers and others to collaborate across different workflows and applications as they build virtual worlds.

NVIDIA developed a “Text to USD” program that automatically generates USD code from text prompts. Users write what they want in natural language, and the AI converts it into a fully realized 3D scene.
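To make “USD code” less abstract, here is roughly what a minimal scene looks like in USD’s human-readable .usda text format, produced by a short Python function. This is not NVIDIA’s Text to USD tool: the function name and parameters are hypothetical, and the function hard-codes the mapping an AI model would have to infer from a prompt such as “a red ball with a radius of one unit.”

```python
# Builds a one-object USD scene as .usda text: a colored sphere under /World.
# Hypothetical helper for illustration only; it does not use NVIDIA's tools
# or the OpenUSD Python API, it simply formats the text by hand.

def sphere_scene_usda(name: str, radius: float, rgb: tuple) -> str:
    """Return a .usda document containing one sphere prim with a display color."""
    r, g, b = rgb
    return (
        "#usda 1.0\n"
        "\n"
        'def Xform "World"\n'
        "{\n"
        f'    def Sphere "{name}"\n'
        "    {\n"
        f"        double radius = {radius}\n"
        f"        color3f[] primvars:displayColor = [({r}, {g}, {b})]\n"
        "    }\n"
        "}\n"
    )


# Parameters an AI might extract from "a red ball with a radius of one unit".
print(sphere_scene_usda("Ball", 1.0, (1, 0, 0)))
```

Saved as a .usda file, text like this should be readable by USD-aware applications. The appeal of Text to USD is that users describe the scene in plain language and never have to write this by hand.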

Insights from Industry Leaders

During their fireside chat, NVIDIA’s Jensen Huang and Meta’s Mark Zuckerberg shared their perspectives on the future of AI. Jensen talked about “Software 3.0,” the term for an approach in which humans prompt AI to generate usable code. He said every NVIDIA engineer works with an intelligent agent that augments their coding ability and efficiency.

Mark Zuckerberg discussed how Software 3.0 will revolutionize content creation. He highlighted that “everyone will have an AI agent working with them side by side,” enabling creators to focus on higher-level tasks while AI handles intricate details. Zuckerberg suggested that you’ll soon be able to kick off weeks- or months-long workflows entirely managed by various AIs.

Virtual Agents: The Interface to AI

Jensen predicted that virtual agents, powered by large language models, will transform customer service by providing personalized, efficient interactions. They’ll be trained on company-specific data like FAQs, menus and party packages. These agents use advanced algorithms to understand and respond to user queries in real time, offering a seamless experience that closely resembles human interaction.

Zuckerberg believes Meta’s Codec Avatars are close to release. These lightweight, realistic virtual humans will enhance the user experience, making AI interfaces more relatable and trustworthy. This will not only improve customer satisfaction but also open up new possibilities for virtual interactions in customer support, sales and even HR.

Everyone Will Have An AI Version of Themselves

Meta’s recent release of AI Studio marks a significant leap in the realm of Creator AI. This platform allows anyone to craft an AI avatar, essentially creating a digital twin capable of interacting with audiences on their behalf. For creators constantly grappling with time constraints, these AI characters can enhance engagement by providing personalized interactions that align perfectly with their style and tone.

Meta AI Studio’s Key Features

• Customizable Avatars: Creators can design AI agents that mirror their personality and communication style.

• Training on Personal Content: These AI agents are trained using the creator’s existing material, ensuring that the interactions remain authentic and true to the creator’s voice.

• Enhanced Engagement: With AI avatars handling routine interactions, creators can focus more on high-level tasks while maintaining a strong connection with their audience.

This development brings up critical questions about content ownership and authenticity. If anyone can create an AI version of a public figure, how do we ensure its legitimacy? For instance, could someone create a Bob Cooney AI without my authorization? (Hint: I already have.) This scenario raises pressing concerns:

• Content Ownership: Who holds the rights to an individual’s digital likeness and the content generated by their AI counterpart?

• Authenticity Verification: How can audiences trust that they are interacting with a genuine representation of the creator?

The implications are profound. As AI characters become more prevalent, establishing frameworks for verifying and protecting these digital identities becomes essential. Trust and authenticity will be paramount in this evolving landscape where sophisticated algorithms increasingly manage human-like interactions.

The Future Is Closer Than It Appears

AI advancements are scary if you’re paying attention. At the same time, they’re easy to dismiss, because mainstream coverage tends to focus on flaws like the so-called “hallucinations” of large language models or strange artifacts in AI-generated images, such as seven-fingered hands. Yet beneath these challenges lies relentless innovation, driven by trillion-dollar enterprises, that cannot be ignored.

“The future is already here – it’s just not evenly distributed.”

– William Gibson

Bob Cooney is a global speaker, moderator and futurist covering extended realities and the metaverse. Widely considered the leading expert on location-based virtual reality, his mission is to prepare the industry for the change coming as these and other emerging technologies begin impacting every aspect of our business and lives. He runs the VR Arcade Game Summit at Amusement Expo and the VR Collective in service of that mission. Follow him at www.bobcooney.com.